
🌀 What is Pooling in Convolutional Neural Networks (CNNs)? – A Detailed Guide

Convolutional Neural Networks (CNNs) have revolutionized computer vision tasks like image classification, face recognition, and object detection. One of the key operations in CNNs that helps these models work efficiently is pooling.

But what exactly is pooling? Why is it used? And how does it affect a neural network?

In this blog post, we’ll break down the concept of pooling, explain how it works, and why it’s a crucial part of CNN architecture.

🔍 What is Pooling?

Pooling (also known as subsampling or downsampling) is a technique used in CNNs to reduce the spatial size of the feature maps.

It helps:

- Reduce the amount of computation and, indirectly, the number of parameters in later layers (pooling layers themselves have no trainable weights)

- Control overfitting

- Make the model more robust to small changes or noise in the input (like slight shifts or distortions in an image)

🔧 How Pooling Works

In a CNN, pooling is typically applied after a convolution layer and its activation function. It operates on each channel of the feature map independently, sliding a small window (like 2×2 or 3×3) across it and replacing each patch with a single value.

There are several types of pooling:

🔹 1. Max Pooling (most common)

Max pooling takes the maximum value from the region covered by the filter.

Example:

For a 2×2 window:

[3 2]

[1 4] → Max = 4

This window is slid across the feature map (usually with a stride of 2), and only the highest value in each patch is kept.

Benefits:

- Captures the strongest features

- Helps retain important information while reducing size
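To make the sliding-window idea concrete, here is a minimal sketch of max pooling in plain Python. The function name `max_pool2d` and its `size` and `stride` parameters are illustrative choices rather than any particular library's API, and the sketch assumes a single-channel feature map with no padding.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Max-pool a 2D list of numbers with a square window (no padding)."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    # Move the window's top-left corner in steps of `stride`.
    for r in range(0, rows - size + 1, stride):
        pooled_row = []
        for c in range(0, cols - size + 1, stride):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            pooled_row.append(max(window))  # keep only the strongest value
        pooled.append(pooled_row)
    return pooled


patch = [[3, 2],
         [1, 4]]
print(max_pool2d(patch))  # [[4]]
```

Deep learning frameworks implement the same operation far more efficiently and across many channels at once; the loop here is only meant to show what happens inside each window.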

🔹 2. Average Pooling

Average pooling takes the average value in the window instead of the maximum.

Example:

[3 2]

[1 4] → Avg = (3+2+1+4)/4 = 2.5

Usage:

Average pooling is less common in modern CNNs but may be used when smoother output is desired, or in specific architectures like some variants of ResNet.
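The 2×2 average from the example above can be reproduced with a short sketch. As before, `avg_pool2d` is a hypothetical helper written for this post, assuming one channel and no padding.

```python
def avg_pool2d(feature_map, size=2, stride=2):
    """Average-pool a 2D list of numbers with a square window (no padding)."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        pooled_row = []
        for c in range(0, cols - size + 1, stride):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            pooled_row.append(sum(window) / len(window))  # mean of the window
        pooled.append(pooled_row)
    return pooled


patch = [[3, 2],
         [1, 4]]
print(avg_pool2d(patch))  # [[2.5]]
```

The only difference from max pooling is the reduction applied to each window: every value contributes to the result, which is why the output tends to be smoother.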

🔹 3. Global Pooling

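- Global Max Pooling or Global Average Pooling reduces each channel’s entire feature map to a single number, so a stack of C feature maps becomes a vector of C values.

- It’s often used just before the output layer, in place of flattening the feature maps into a large fully connected layer.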
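A small sketch can show what "global" means here. It assumes the input is a list of 2D feature maps, one per channel; the function names and the sample values are made up for illustration.

```python
def global_avg_pool(channels):
    """Collapse each 2D feature map (one per channel) to its mean."""
    return [
        sum(sum(row) for row in fm) / (len(fm) * len(fm[0]))
        for fm in channels
    ]


def global_max_pool(channels):
    """Collapse each 2D feature map (one per channel) to its maximum."""
    return [max(max(row) for row in fm) for fm in channels]


# Two hypothetical 2x2 channels.
channels = [
    [[1, 2], [3, 4]],
    [[0, 8], [2, 6]],
]
print(global_avg_pool(channels))  # [2.5, 4.0]
print(global_max_pool(channels))  # [4, 8]
```

Whatever the height and width of the input, the result has exactly one value per channel.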

🧠 Why Use Pooling?

Here’s why pooling layers are so important in CNNs:

✅ 1. Dimensionality Reduction

Pooling reduces the width and height of the feature maps, making the network faster and less memory-intensive.
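How much smaller the output gets depends on the window size and stride. A quick way to check is the standard no-padding formula, output = floor((n − size) / stride) + 1, sketched below with a hypothetical helper `pooled_size`.

```python
def pooled_size(n, size=2, stride=2):
    """Output length along one dimension for pooling without padding."""
    return (n - size) // stride + 1


print(pooled_size(4))                    # 2
print(pooled_size(224))                  # 112
print(pooled_size(7, size=3, stride=2))  # 3
```

With the common 2×2 window and stride of 2, both width and height are halved, so each pooled feature map holds roughly a quarter of the original values.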

✅ 2. Translation Invariance

Pooling makes CNNs less sensitive to the exact position of features. If an object in an image shifts slightly and its strongest activations stay within the same pooling windows, the pooled features remain the same.
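The following sketch illustrates this with a toy 4×4 feature map containing a single strong activation. The helpers `max_pool2d` and `single_activation` are hypothetical names for this post, and the value 9 is arbitrary.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Max-pool a 2D list of numbers with a square window (no padding)."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[r + i][c + j]
                for i in range(size) for j in range(size))
            for c in range(0, cols - size + 1, stride)
        ]
        for r in range(0, rows - size + 1, stride)
    ]


def single_activation(row, col, n=4):
    """An n x n map of zeros with one strong activation at (row, col)."""
    fm = [[0] * n for _ in range(n)]
    fm[row][col] = 9
    return fm


original = single_activation(0, 0)
shifted = single_activation(1, 1)  # one pixel down and right, same window
crossed = single_activation(2, 2)  # far enough to land in the next window

print(max_pool2d(original))  # [[9, 0], [0, 0]]
print(max_pool2d(shifted))   # [[9, 0], [0, 0]]
print(max_pool2d(crossed))   # [[0, 0], [0, 9]]
```

The small shift leaves the pooled output unchanged, while the larger one does not. The invariance is local and approximate, not a guarantee for arbitrary shifts.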

✅ 3. Overfitting Control

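Pooling layers have no trainable parameters of their own, but by shrinking the feature maps they reduce the number of inputs, and therefore weights, in any fully connected layers that follow. Fewer weights and a coarser representation help keep the model from overfitting to the training data.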
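A pooling layer itself has no weights, so the saving shows up in the layers that come after it. As a rough illustration, the sketch below counts the parameters of a fully connected layer fed by flattened feature maps, with and without one 2×2 pooling step. The sizes (32 channels of 28×28, 10 output classes) are made-up numbers, and `dense_params` is a hypothetical helper.

```python
def dense_params(num_inputs, num_outputs):
    """Weights plus biases of one fully connected layer."""
    return num_inputs * num_outputs + num_outputs


# Hypothetical sizes, chosen only for illustration.
channels, height, width, classes = 32, 28, 28, 10

without_pooling = dense_params(channels * height * width, classes)
# One 2x2 pooling step with stride 2 halves height and width.
with_pooling = dense_params(channels * (height // 2) * (width // 2), classes)

print(without_pooling)  # 250890
print(with_pooling)     # 62730
```

In this toy setup a single pooling step cuts the fully connected layer to about a quarter of its original size.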

📊 Example: Pooling in Action

Let’s say you have a 4×4 feature map:

1 3 2 4

5 6 1 2

3 2 0 1

1 2 3 4

Apply 2×2 Max Pooling with a stride of 2:

Result:

6 4

3 4

You’ve just reduced a 4×4 map to a 2×2 map, keeping only the strongest activation from each 2×2 window.

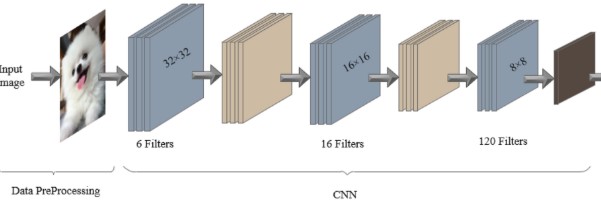
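The 4×4 example can be checked in a few lines of Python. This sketch uses a generic, hypothetical `pool2d` helper that takes the reduction as an argument, so the same map can also be average-pooled for comparison.

```python
def pool2d(feature_map, reduce_fn, size=2, stride=2):
    """Apply reduce_fn to each size x size window of a 2D list (no padding)."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            reduce_fn([feature_map[r + i][c + j]
                       for i in range(size) for j in range(size)])
            for c in range(0, cols - size + 1, stride)
        ]
        for r in range(0, rows - size + 1, stride)
    ]


def mean(values):
    return sum(values) / len(values)


feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [3, 2, 0, 1],
    [1, 2, 3, 4],
]

print(pool2d(feature_map, max))   # [[6, 4], [3, 4]]
print(pool2d(feature_map, mean))  # [[3.75, 2.25], [2.0, 2.0]]
```

Max pooling keeps the peak of each window, while average pooling blends all four values, which is the contrast the summary table draws.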

🏗️ Where Does Pooling Fit in a CNN?

Here’s a typical structure of a CNN:

- Input Layer – The raw image

- Convolution Layer – Extracts features

- Activation Function (e.g., ReLU) – Introduces non-linearity

- Pooling Layer – Reduces spatial dimensions

- (Repeat convolution + pooling layers)

- Fully Connected Layer – Makes predictions

- Output Layer – Final result (e.g., class label)
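To see how the spatial dimensions change through such a stack, the sketch below traces shapes layer by layer. Everything in it is an assumption for illustration: a 28×28 single-channel input, 3×3 convolutions with stride 1 and no padding, 2×2 pooling with stride 2, and two convolution + pooling blocks with 8 and 16 filters.

```python
def conv_out(n, kernel=3, stride=1):
    """Output length of a convolution along one dimension (no padding)."""
    return (n - kernel) // stride + 1


def pool_out(n, size=2, stride=2):
    """Output length of a pooling layer along one dimension (no padding)."""
    return (n - size) // stride + 1


def trace_shapes(height, width, channels, blocks, num_classes):
    """blocks: output-channel counts, one per conv + ReLU + pool block."""
    shapes = [("input", (channels, height, width))]
    for i, out_channels in enumerate(blocks, start=1):
        # Convolution changes the channel count; ReLU leaves the shape as is.
        height, width = conv_out(height), conv_out(width)
        channels = out_channels
        shapes.append((f"conv{i} + ReLU", (channels, height, width)))
        # Pooling shrinks height and width but keeps the channel count.
        height, width = pool_out(height), pool_out(width)
        shapes.append((f"pool{i}", (channels, height, width)))
    shapes.append(("flatten", (channels * height * width,)))
    shapes.append(("fully connected", (num_classes,)))
    return shapes


for name, shape in trace_shapes(28, 28, 1, blocks=[8, 16], num_classes=10):
    print(f"{name:16s} {shape}")
```

Under these assumptions the 28×28 input shrinks to 13×13 after the first pooling layer and 5×5 after the second, so the fully connected layer receives 400 values instead of tens of thousands.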

📌 Summary

| Feature | Max Pooling | Average Pooling |
|---|---|---|
| Purpose | Keeps strongest features | Averages all features |
| Use case | Most common in modern CNNs | Smoother, less aggressive |
| Benefit | Reduces size and overfitting | Similar but keeps more subtle info |

✅ Conclusion

Pooling is a small but powerful part of Convolutional Neural Networks. It shrinks the feature maps, which cuts computation and memory use, and it allows CNNs to recognize patterns in images even when they shift slightly.

In most CNNs today, Max Pooling is the preferred choice, but understanding all types of pooling gives you more control and flexibility when designing your own deep learning models.